Illusion

How might we externalize internal sensory phenomena by blending motion, sound, and visuals into an immersive performance visual?

Type

Performance Visual

Role

Interaction Design · Creative Coding

Tools

Ableton Live · TouchDesigner

Concept

I've always been curious about the mysterious lights that appear when I close my eyes and press on my eyelids, which I later learned are called phosphenes. These eerie, self-generated lights in the dark felt both deeply personal and universally shared. I wanted to explore how visual technology could externalize such internal sensory experiences.

I soon discovered atmospheric ghost lights: natural phenomena such as will-o’-the-wisps that, like phosphenes, are known for being elusive and mysterious. This connection between internal and external phenomena inspired me to create a live performance interface that integrates motion, sound, and visuals in real time. The goal was to design an interactive stage visual where performers' movements and inputs shape ghostly light projections, blending personal sensory experiences with collective storytelling.

Research

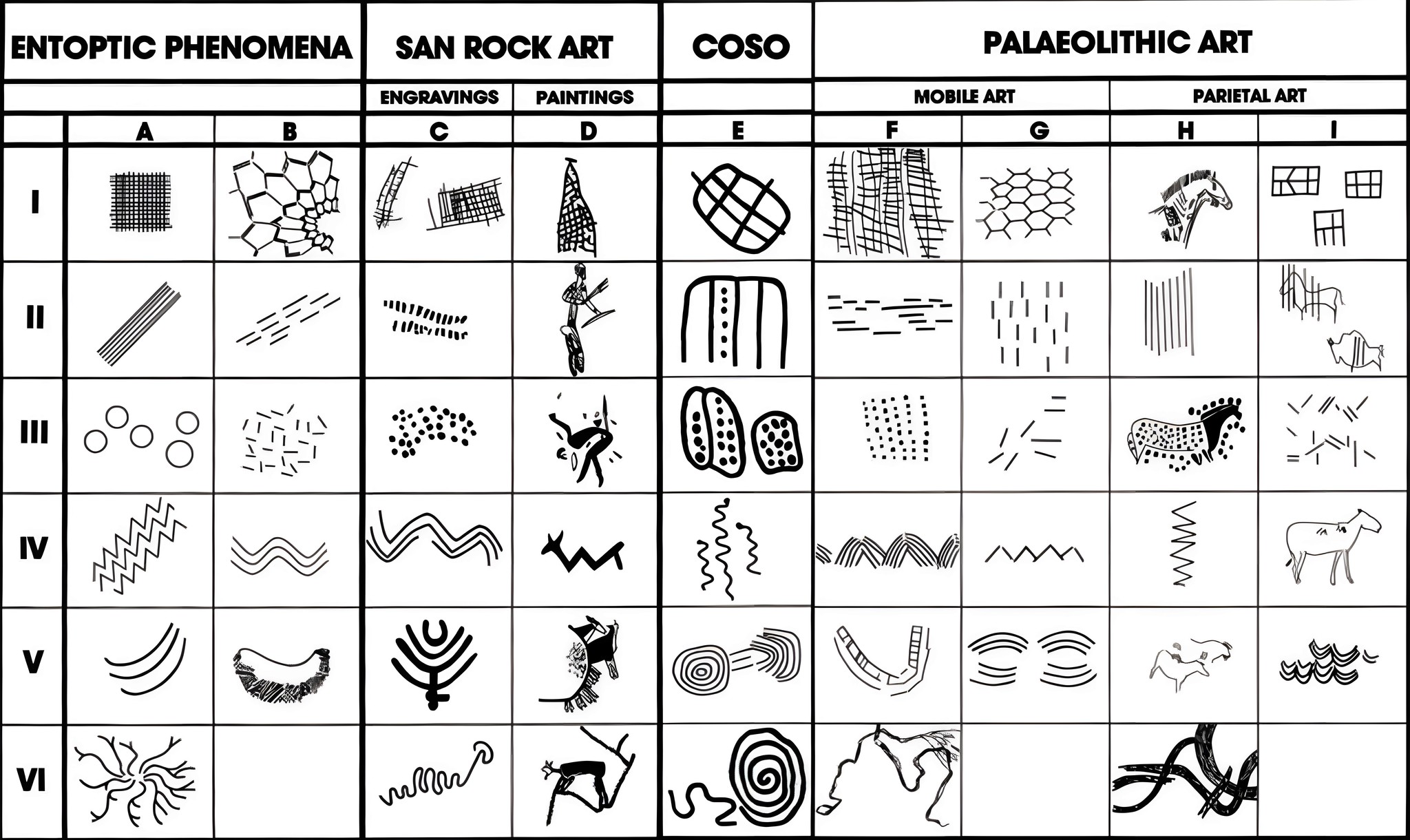

To better understand the relationship between internal visual processes and external natural phenomena, I studied Professor Jack Cowan's lecture on geometric hallucinations and their connection to brain architecture. Referencing archaeologist David Lewis-Williams, Cowan argued that many geometric patterns found in cave paintings, such as blobs, dots, and lattices, are hallucinatory in origin. He explained that early humans may have seen these patterns in dark caves lit by flickering light, a condition known to induce geometric hallucinations. This connection between environmental stimuli and internal visual processes fascinated me, as it illustrated how the brain turns internal experiences into externalized imagery.

"From rock and cave art to the architecture of the visual cortex" by Professor Jack Cowan

Cowan further explained that these patterns, known as entoptic forms, originate from the brain's visual cortex. This idea highlights how the brain's architecture shapes perception. The notion that geometric hallucinations represent the brain "revealing itself to itself" resonated deeply with the concept of this project, where imagination and sensory phenomena are externalized into visual form. This research provided a visual reference and conceptual framework for using OpenGL Shading Language (GLSL) to transform these internal experiences into dynamic, real-time visuals for a performance interface.

Process

I began prototyping with Leap Motion, exploring how body data could create generative art. Initially, I focused on using hand movements to dynamically manipulate abstract visuals. As the project progressed, I transitioned to Azure Kinect, which provides full-body motion tracking, enabling more immersive and expressive interactions tailored for live performance.

Leap Motion prototype demo
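As a rough illustration of how tracked movement can drive a visual parameter, the sketch below maps a single tracked coordinate to a parameter range and smooths it to reduce sensor jitter. It is not the project's actual TouchDesigner network: the hand-height range, the "glow" parameter, and the smoothing factor are all hypothetical values chosen for the example.

```python
def map_range(value, in_min, in_max, out_min, out_max):
    """Linearly map value from [in_min, in_max] to [out_min, out_max], clamped."""
    if in_max == in_min:
        raise ValueError("input range must not be empty")
    t = (value - in_min) / (in_max - in_min)
    t = max(0.0, min(1.0, t))  # clamp so out-of-range tracking data stays bounded
    return out_min + t * (out_max - out_min)


class Smoother:
    """Exponential moving average to soften frame-to-frame jitter."""

    def __init__(self, alpha=0.2):
        # alpha near 0 = heavy smoothing, alpha near 1 = almost raw input
        self.alpha = alpha
        self.value = None

    def update(self, sample):
        if self.value is None:
            self.value = sample  # first sample initializes the filter
        else:
            self.value += self.alpha * (sample - self.value)
        return self.value


if __name__ == "__main__":
    # Hypothetical hand heights in metres, as a tracker might report per frame.
    hand_heights = [0.10, 0.12, 0.45, 0.44, 0.80]
    smoother = Smoother(alpha=0.3)
    for height in hand_heights:
        # Assumed mapping: 0.0 to 1.0 m of hand height controls glow from 0 to 1.
        glow = smoother.update(map_range(height, 0.0, 1.0, 0.0, 1.0))
        print(f"height={height:.2f} m -> glow={glow:.3f}")
```

A lower `alpha` gives calmer visuals at the cost of a slight lag behind the performer, which is a trade-off worth tuning on stage rather than in code.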

Technology and Features

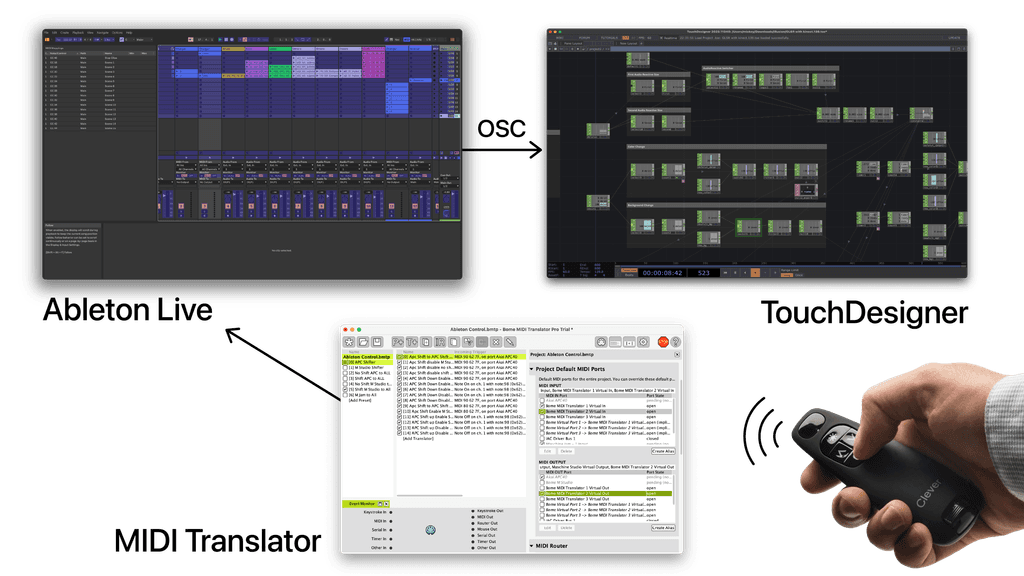

To integrate sound into the experience, I connected Ableton Live and TouchDesigner using LiveGrabber, which enables OSC (Open Sound Control) communication between the two platforms. I then used Bome MIDI Translator to control the audio and visuals in real time, so that changes in sound drive changes in the visuals. I mapped a presentation remote as a custom MIDI controller that sends signals to Ableton Live, which in turn triggers corresponding changes in the visuals within TouchDesigner.

Performance System Design

Outcome

The final system merges motion tracking, sound design, and real-time visuals into a live performance. Performers' movements control phosphene-inspired visuals synchronized with sound. The project connects sensory experiences with user interaction, offering a dynamic stage visual design for live performance.

A 10-minute version of the live performance was presented publicly at the 2024 Designing Interfaces for Live Performance Final Show and later adapted into an interactive installation in the Emerging Media Studio.

Interactive installation version in the Emerging Media Studio

Next Steps

The biggest challenge was balancing abstraction with clarity, ensuring the visuals felt ethereal but still emotionally resonant. I plan to refine motion tracking for smoother interactions and expand the visual library with more geometric patterns. Adding environmental inputs like ambient light or sound could make the system more responsive.

Credits

Mickey Oh

Mentor

David Rios

Documentation

Angy He, Leah Bian