Celestial Odyssey

Type

VR·XR Experience

Role

Programming

Tool

WebGL · Meta Quest

Preview

Concept

Inspired by the complexity and beauty of celestial systems, this project reimagines how we explore the cosmos through virtual interaction. While researching, I came across NASA's Space Place website for kids, which explains the Solar System and offers a few simple flash games for educational purposes. Some of them looked interesting, but I realized they wouldn't have engaged me as a kid because the interactions were limited to static visuals.

I decided to develop a 3D interactive universe that feels intuitive and engaging. My goal was to use VR devices to create an immersive environment where users can dynamically explore stars, constellations, and galaxies, bridging the gap between education and interactive storytelling in a way that traditional 2D visuals cannot.

Screenshot from https://spaceplace.nasa.gov

Prototyping

I used Three.js as the framework for building the 3D scene because of its flexibility and its ability to render real-time, interactive visuals in the browser. To manage performance while rendering numerous stars and planets, I used BufferGeometry, which stores vertex data such as positions and colors in typed-array buffers, reducing the cost of passing that data to the GPU through WebGL.
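As a rough illustration of the buffer layout, here is a minimal sketch of how star positions can be packed into one flat typed array. The helper name `createStarPositions`, the star count, and the radius are hypothetical and chosen for illustration; they are not taken from the project's code.

```typescript
// Hypothetical helper (not the project's actual code): packs star positions
// into one flat Float32Array laid out as x0, y0, z0, x1, y1, z1, ...
// This is the layout a position attribute with an item size of 3 expects.
function createStarPositions(
  count: number,
  radius: number,
  random: () => number = Math.random
): Float32Array {
  const positions = new Float32Array(count * 3);
  for (let i = 0; i < count; i++) {
    // Each coordinate is sampled uniformly from [-radius, radius),
    // so the stars fill a cube centered on the origin.
    positions[i * 3] = (random() * 2 - 1) * radius;
    positions[i * 3 + 1] = (random() * 2 - 1) * radius;
    positions[i * 3 + 2] = (random() * 2 - 1) * radius;
  }
  return positions;
}

// Assumed values for illustration: 5000 stars in a cube of half-size 400.
const starPositions = createStarPositions(5000, 400);

// In a Three.js scene, the array could then be attached to a geometry with:
//   geometry.setAttribute('position', new THREE.BufferAttribute(starPositions, 3));
```

Keeping every star in a single buffer means the whole field can be drawn as one object instead of thousands of separate meshes.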

I found an example by Fernando Serrano in the Three.js documentation that demonstrated particles detecting their neighbors and drawing lines between one another based on their distance. I expanded this technique to create random constellations, modifying the distance range logic and positioning. I also added HDR backgrounds to simulate cosmic textures, then combined these pieces into a prototype where users can interact with the celestial elements using mouse input.
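The distance-based linking can be sketched as a pairwise check over the star positions. This is a simplified illustration under my own assumptions, not the referenced example or the project's code: `connectNearbyStars` and `maxDistance` are hypothetical names, and the brute-force loop is the simplest form of the idea.

```typescript
// Hypothetical helper: returns index pairs [i, j] for every two stars whose
// distance is below maxDistance. Positions are a flat x, y, z array.
function connectNearbyStars(
  positions: Float32Array,
  maxDistance: number
): Array<[number, number]> {
  const starCount = Math.floor(positions.length / 3);
  const maxDistanceSq = maxDistance * maxDistance;
  const pairs: Array<[number, number]> = [];

  for (let i = 0; i < starCount; i++) {
    for (let j = i + 1; j < starCount; j++) {
      const dx = positions[i * 3] - positions[j * 3];
      const dy = positions[i * 3 + 1] - positions[j * 3 + 1];
      const dz = positions[i * 3 + 2] - positions[j * 3 + 2];
      // Comparing squared distances avoids a square root per pair.
      if (dx * dx + dy * dy + dz * dz < maxDistanceSq) {
        pairs.push([i, j]);
      }
    }
  }
  return pairs;
}

// Three stars on the x axis: only the first two are close enough to link.
const samplePositions = new Float32Array([0, 0, 0, 1, 0, 0, 10, 0, 0]);
const constellationPairs = connectNearbyStars(samplePositions, 2);
```

Each pair can become one line segment, so widening or narrowing `maxDistance` directly controls how dense the resulting constellation looks. The nested loop grows quadratically with the number of stars, which is fine for a few hundred points but would need a spatial grid or similar structure at larger scales.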

The first prototype includes an HDR background, constellations, and mouse interaction

Outcome

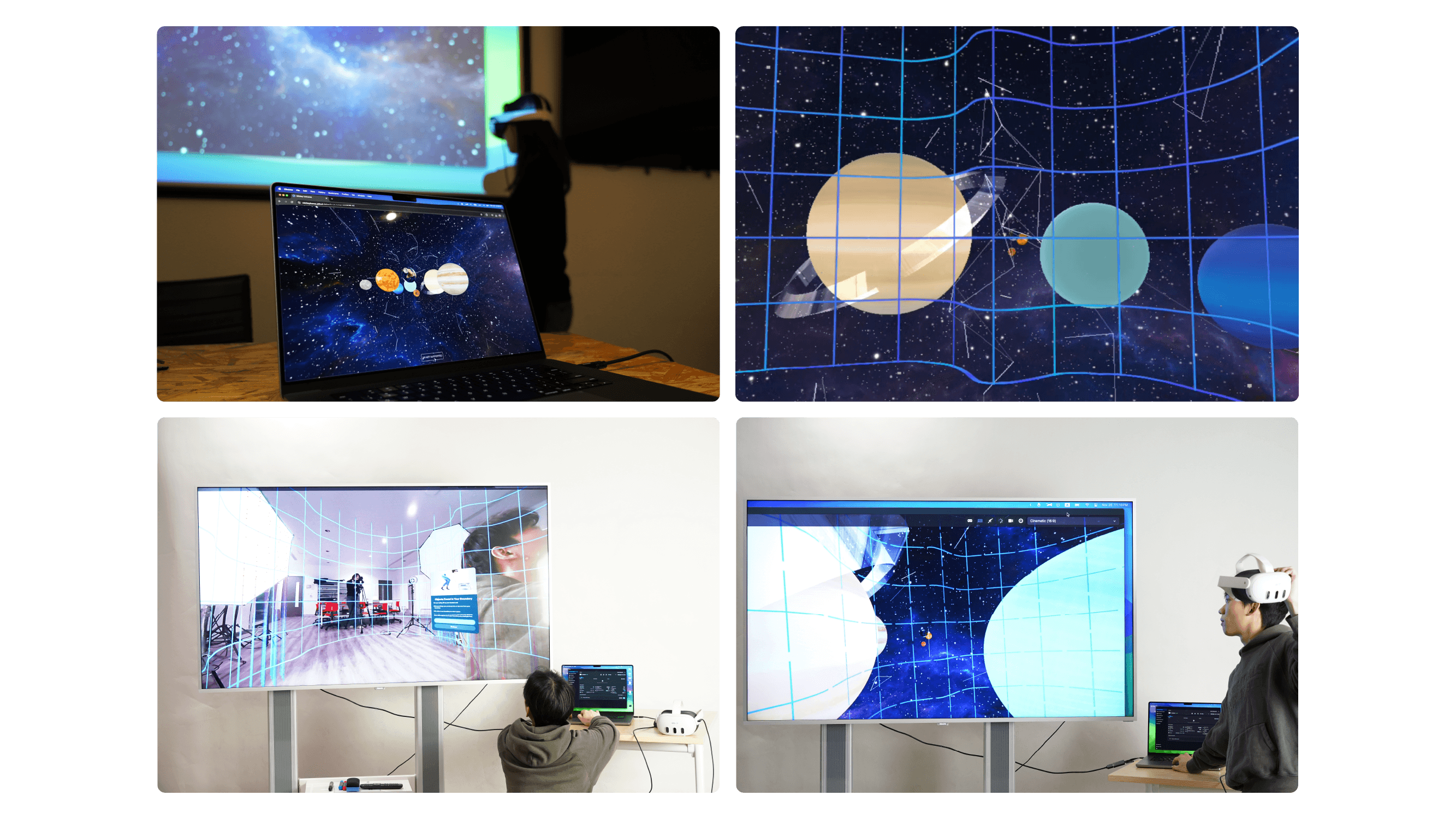

I converted the final version of the project into a VR/XR experience using the WebXR Device API. I used WebXRManager to enable XR, track VR session states, and retrieve controllers for interaction. I set up and tested the VR environment with Meta Quest.

I implemented the core XR functionality by combining Three.js controllers with raycasting. Each controller casts a ray into the scene, and a raycaster detects where that ray intersects the celestial elements. I added trigger-based interactions through event listeners, allowing users to navigate the cosmic universe with full-body motion and intuitive hand gestures.
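To show the kind of intersection test a controller ray relies on, here is a minimal ray-sphere check. It is a simplified stand-in that I am assuming for illustration, treating a celestial body as a sphere; it is not the Three.js Raycaster implementation or the project's code, and `raySphereDistance` is a hypothetical name.

```typescript
type Vec3 = [number, number, number];

const subtract = (a: Vec3, b: Vec3): Vec3 => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a: Vec3, b: Vec3): number => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];

// Hypothetical helper: distance along the ray to the first hit on the sphere,
// or null if the ray misses. The direction is assumed to be normalized.
function raySphereDistance(
  origin: Vec3,
  direction: Vec3,
  center: Vec3,
  radius: number
): number | null {
  const toCenter = subtract(center, origin);
  // Length of the projection of toCenter onto the ray direction.
  const projection = dot(toCenter, direction);
  // Squared distance from the sphere center to the closest point on the ray line.
  const closestSq = dot(toCenter, toCenter) - projection * projection;
  const radiusSq = radius * radius;
  if (closestSq > radiusSq) return null; // the ray line passes outside the sphere

  const halfChord = Math.sqrt(radiusSq - closestSq);
  const entry = projection - halfChord;
  const exit = projection + halfChord;
  if (exit < 0) return null; // the sphere is entirely behind the ray origin
  // If the origin is inside the sphere, the entry point is behind it,
  // so the exit point is the first hit in front of the controller.
  return entry >= 0 ? entry : exit;
}

// A controller at the origin pointing down -Z at a planet 5 units away.
const hitDistance = raySphereDistance([0, 0, 0], [0, 0, -1], [0, 0, -5], 1);
```

Running this test against every selectable object and keeping the smallest non-null distance gives the element the controller is pointing at, which a trigger event listener can then act on.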

VR/XR setup using Meta Quest 3

Final version user testing

The final version was displayed at the IMA Fall 23 End-of-semester Show

Next Steps

The biggest challenge was optimizing performance while maintaining visual fidelity in the XR environment. For future development, I plan to refine the raycasting logic for smoother interactions, expand the visual library with more detailed planetary textures, and implement GLSL shaders for enhanced realism.

Seeing kids enjoy my project during the show was rewarding, and it matched my original intention of making this project more engaging for younger audiences. I want to build on this educational purpose by adding collaborative features that let multiple users explore the virtual universe together, and by incorporating additional inputs such as voice commands or touch interactions.

Credits

Interaction Design / Software / Code

Mickey Oh

Mentor

J. H. Moon